I was working on some Hadoop stuff recently, and as a total beginner, I found that the Map/Reduce concept was not easy to understand, despite the huge number of tutorials.

The Wordcount example is the ‘Hello World’ of Hadoop, but when I prepared a small presentation for my team, I realized it was not clear enough to explain Map/Reduce in 5 minutes.

As you may already know, the Map/Reduce pattern is a pattern that is very good for embarrassingly parallel algorithms.

Okayyyy but… What is an embarrassingly parallel algorithm?

Answer: It is an algorithm that is very well fit to be executed multiple times in parallel.

Ok then… what is very well suited for a parallel execution?

Answer: Any algorithm that’s working on data that can be isolated.

When writing an application, if you execute multiple occurrences of it at the same time, and they need to access some common data, there will be some clash, and you will have to handles cases like when one occurrence is changing some data while another other is reading it. You’re doing concurrency.

But if your occurrence is working on some data that no other occurrence will need, then you’re doing parallelism. Obviously you can scale further, since you do not have concurrency issues.

So let’s take an example, let’s say you have a list of cities, and each one has two attributes : the state it belongs to, and its yearly average temperature. E.g. : San Francisco : {CA, 58}

Now you want to calculate the yearly average temperature BY STATE.

Since you can group cities by state, and calculate the average temperature of a state without caring about cities of other states, you have a great embarrassingly parallel algorithm candidate.

If you wanted to do it sequentially, you would start with an empty list of yearly state average temperatures. Then you would iterate through the list of cities, and for each city, look at the state, then update the relevant yearly state average temperature.

Fortunately, it’s very easy to do it in parallel instead.

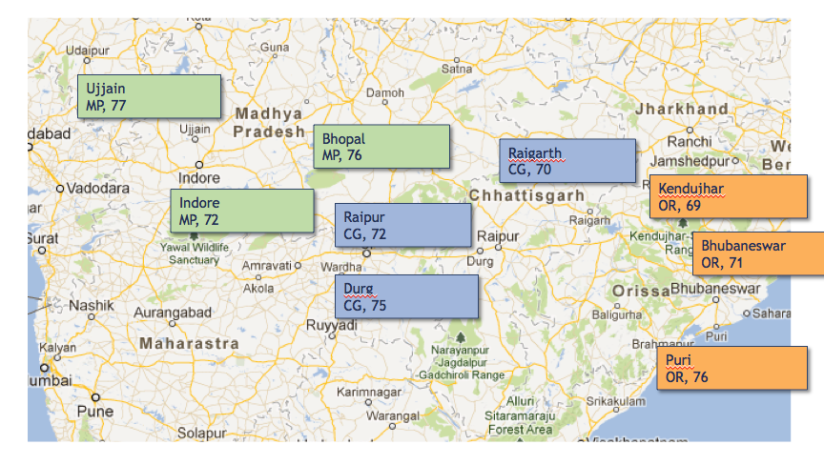

Let’s have a look at this map:

This is a map of India. There are several states : MP, CG, OR… And several cities, each one having {State, City average temperature} as value.

We want here to calculate the yearly average per state. In order to do that, we should group the city average temperatures by state, then calculate the average of each group.

We don’t really care about the city names, so we will discard those and keep only the state names and cities Temperatures.

Now we have only the data we need, and we can regroup the temperatures values by state. We’re going to get a list of temperatures averages for each state.

At this point, we have the data in good shape to actually do the maths… All we have to do is to calculate the average temperature for each state

That wasn’t hard.

We had some input data. We did a little regrouping, then we did the calculation. And all this could be executed in parallel (One parallel task for each state).

Well… That was Map/Reduce!

Let’s do it again

Map/Reduce has 3 stages : Map/Shuffle/Reduce

The Shuffle part is done automatically by Hadoop, you just need to implement the Map and Reduce parts.

You get input data as <Key,Value> for the Map part.

In this example, the Key is the City name, and the Value is the set of attributes : State and City yearly average temperature.

Since you want to regroup your temperatures by state, you’re going to get rid of the city name, and the State will become the Key, while the Temperature will become the Value.

Now, the shuffle task will run on the output of the Map task. It is going to group all the values by Key, and you’ll get a List<Value>

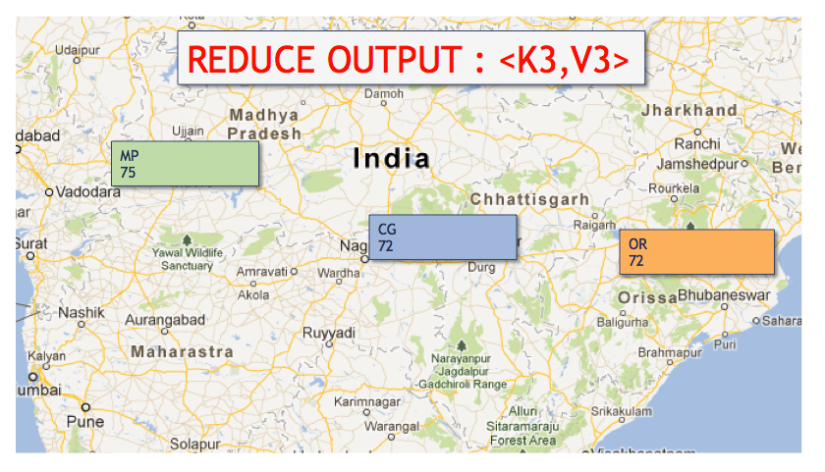

And this is what the Reduce task will get as input : the Key, List<Value> from the Shuffle task.

The Reduce task is the one that does the logic on the data, in our case this is the calculation of the State yearly average temperature.

And that’s what we will get as final output

This is how the data is shaped across Map/Reduce:

Mapper <K1, V1> —> <K2, V2>

Reducer <K2, List<V2>> —><K3, V3>

I hope this helped makes things a bit clearer about Map/Reduce, if you’re interested in explanations about Map Reduce v2/YARN, just leave a comment and I’ll post another entry.

PS: You can find the java code for this example here:

Great explanation. Can you share your Hadoop code for this?

Sure, here it is

https://github.com/jsoftbiz/mapreduce_1

This helped. Thanks!

Really useful explanation. Especially the distinction between parallelism and concurrency. Do continue writing, and thank you!

“If you can’t explain it simply, you don’t understand it well enough.”

It seems U make it!

How does map/reduce make this parallel?

Hi I got the concept but not able to run the example code. I have imported the project in eclipse. Ran the mvn install command. It resolved all the dependencey. but what next ? How can I see the map reduce running this code. Where is out txt file.

Hi,

you need to install Hadoop, create a HDFS partition, import the input data into it, launch the job (the code you got on github), then the output text file will be on HDFS.

If this is confusing, you should look at :

http://hadoop.apache.org/docs/stable/mapred_tutorial.html#Inputs+and+Outputs

I really appreciate this article. Until today I haven’t understood correctly how MapReduce works, until I found your page. Thank you.

Claudio Romo – same about me! Thanks a lot for such a nice tutorial!

Oh, and one question: why do we have but not – key seems to be unchanged after reduce step.

Hello, could you please post the principle of working of MR2/YARN architecture?

wonderful points altogether, you simply won a new reader.

What would you recommend in regards to your publish that you simply made some days ago?

Any certain?